Over the past decade, higher education institutions across the globe have substantially changed their approach to learning assessment. Many have built quality cultures along with systems and processes that support continuous improvement. The following case studies highlight best practices from four such institutions, each of which has built systems and processes to measure student achievement thoughtfully and improve the curriculum to foster stronger performance. In collaboration with Peregrine, these institutions show how they embed assessment into their programs and use data to demonstrate outcomes.

Case Studies

Utica University

Utica University in New York emphasizes the narrative behind the data, transforming raw data into actionable insights. The university chose to focus on a few key indicators of program success:

- Knowledge levels of incoming and graduating students on key program learning goals.

- Student perception of the utility of learning goals and their satisfaction with program delivery.

- Program-level achievement of learning outcomes or opportunities for improvement.

Measure 1: Knowledge Levels

At the outset and conclusion of their programs, both undergraduate and MBA students in the Business and Justice Studies (BJS) department take Peregrine Inbound and Outbound exams. These exams track student progress and inform curriculum redesign, which helps ensure that learning outcomes are achieved.

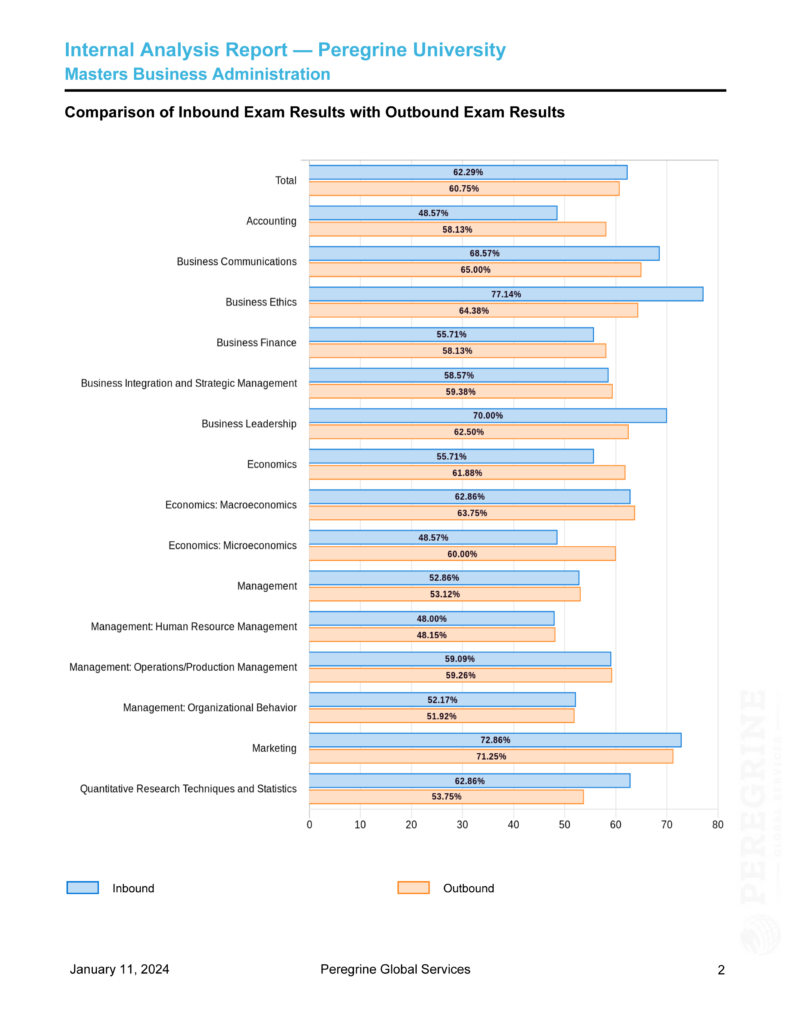

Inbound and Outbound assessments help measure learner progress throughout an academic program. The Inbound assessment, conducted at the beginning of an academic program, gauges students’ initial knowledge and skill levels. The Outbound assessment, administered at the end of the program, measures the knowledge and skills students have gained. By comparing the results of these two assessments, educators can evaluate the impact of their curriculum and teaching methods. Figure 1 shows one of the ways Inbound and Outbound exam results are illustrated in Peregrine’s Internal Analysis Report.

This practice is beneficial for several reasons:

- It provides tangible data on student learning progress, enabling educators to identify which curriculum areas are effective and which need improvement. This data-driven approach ensures the curriculum remains relevant, challenging, and aligned with educational objectives.

- It helps tailor teaching strategies to meet future cohorts’ needs better, ensuring that each new group of students benefits from continuously improved educational practices.

- It aligns with the principles of outcome-based education, which focuses on what students can do after they complete a program.

By assessing students’ initial and final knowledge levels, institutions can ensure that their programs are effectively equipping students with the necessary skills and knowledge required in their respective fields.
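The Inbound and Outbound comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not Peregrine's reporting logic: the function names, the `min_gain` threshold, and every score below are hypothetical and invented purely for demonstration.

```python
from statistics import mean


def topic_gains(inbound, outbound):
    """Return mean Outbound minus mean Inbound score for each shared topic."""
    gains = {}
    for topic in inbound.keys() & outbound.keys():
        gains[topic] = mean(outbound[topic]) - mean(inbound[topic])
    return gains


def flag_small_gains(gains, min_gain):
    """List topics whose gain falls below a chosen (hypothetical) threshold."""
    return sorted(topic for topic, gain in gains.items() if gain < min_gain)


# Hypothetical percent-correct scores, invented for illustration only.
inbound = {
    "Marketing": [40, 50, 45],
    "Human Resource Management": [42, 48, 45],
}
outbound = {
    "Marketing": [60, 70, 65],
    "Human Resource Management": [44, 50, 47],
}

gains = topic_gains(inbound, outbound)
flagged = flag_small_gains(gains, min_gain=10)
print(gains)
print(flagged)
```

A topic that appears in the flagged list is a candidate for curriculum review; the threshold itself is a judgment call for each program.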

A notable example is the substantial curriculum enhancement in the MBA program at Utica University. A review of Outbound exam scores showed that learners were underperforming in Human Resource Management compared to learners at peer institutions. After a comprehensive review of the program, the university attributed the minimal increase in scores between the Inbound and Outbound exams to the fact that human resource concepts were not reinforced throughout the MBA program. In response, Utica University added a Human Resource Management (HRM) course to the core curriculum of the MBA program to address this gap and ensure that students graduate with well-rounded knowledge in this crucial area.

Measure 2: Student Perception

For the second measure, student perception of their learning, Utica relied on an internal survey using a Google Form linked to the LMS for courses at the beginning and end of a program. Despite its well-intentioned design, this approach encountered a significant hurdle: student engagement. The surveys, although easily accessible through the LMS and actively promoted by faculty, suffered from low response rates. This lack of participation hindered the university’s ability to gather comprehensive data.

Addressing this challenge, Utica’s assessment team collaborated with Peregrine to embed the student survey directly into the Peregrine exams. This integration positioned the survey at the very beginning of the Peregrine exam, a mandatory component of the program, thus ensuring student engagement was at its peak. By doing so, students were prompted to complete the survey before receiving any test questions, leading to a much higher response rate than previously achieved.

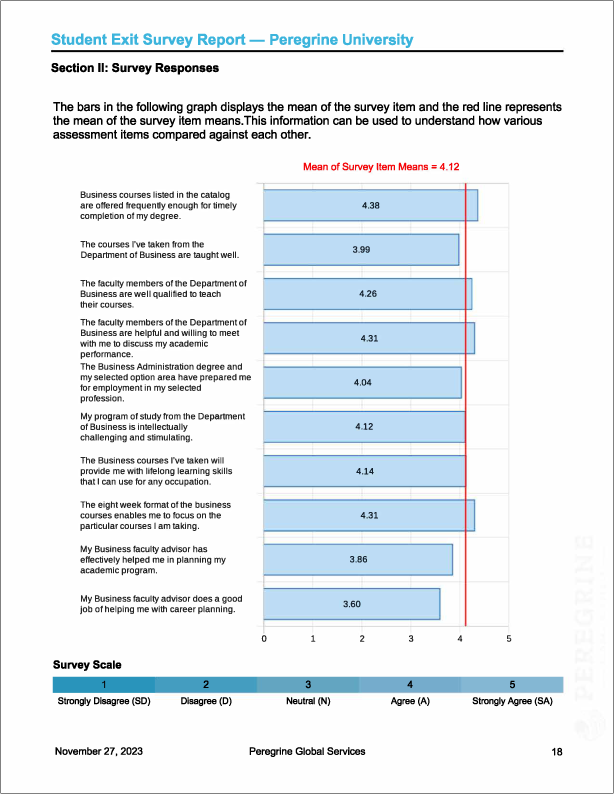

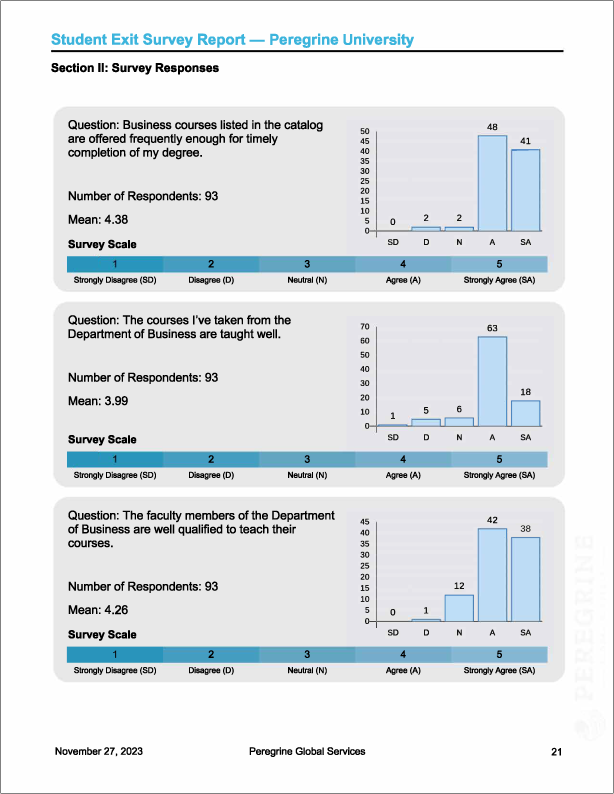

The revised survey employed a 5-point Likert scale, allowing students to quantitatively rate their perception of how well the learning goals were achieved. The survey also provided an opportunity for open-ended responses, enabling students to express their thoughts on program satisfaction more freely and comprehensively. This format aligned with ACBSP Standard 3, which emphasizes Student and Stakeholder Focus, so the feedback process was both thorough and relevant to accreditation standards. Figures 2 and 3 show an example of how survey data is presented. In the report, responses to specific questions regarding the frequency of course offerings, quality of instruction, and faculty qualifications are visualized in bar graphs, indicating the levels of agreement or disagreement among respondents.
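As a rough sketch of how 5-point Likert responses might be summarized into the agreement levels that such bar graphs display, consider the Python below. The label wording, function names, and sample responses are assumptions made for illustration; the source does not specify the survey's exact labels or how the report computes its figures.

```python
from collections import Counter

# Assumed label wording for a 5-point agreement scale (not taken from the survey).
LIKERT_LABELS = {
    1: "Strongly disagree",
    2: "Disagree",
    3: "Neutral",
    4: "Agree",
    5: "Strongly agree",
}


def likert_distribution(responses):
    """Return the percentage of valid responses at each point of the scale."""
    valid = [r for r in responses if r in LIKERT_LABELS]
    if not valid:
        raise ValueError("no valid Likert responses")
    counts = Counter(valid)
    return {
        LIKERT_LABELS[point]: 100 * counts[point] / len(valid)
        for point in LIKERT_LABELS
    }


def percent_agreement(responses):
    """Combine 'Agree' and 'Strongly agree' into one summary figure."""
    dist = likert_distribution(responses)
    return dist["Agree"] + dist["Strongly agree"]


# Hypothetical responses to one survey question, invented for illustration.
responses = [5, 4, 4, 3, 2, 5, 4, 1, 4, 5]
print(likert_distribution(responses))
print(percent_agreement(responses))
```

Reporting the full distribution alongside the combined agreement figure keeps neutral and negative responses visible rather than hiding them behind a single number.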

By embedding the survey into an existing and mandatory component of the program, the university effectively addressed the issue of low response rates. This approach also streamlined the data collection process, making it more sustainable and less intrusive.

Most importantly, the data collected through this enhanced survey method offered invaluable insights for continuous improvement in both undergraduate and graduate programs. By capturing students’ perceptions and experiences in real-time and in a more structured manner, Utica University could make data-driven decisions to enhance the quality of education.

An effective program evaluation survey incorporates diverse and insightful questions across various categories, adjusted to fit the specific context and objectives of the program.

Measure 3: Program-level Achievement

Utica University’s use of Peregrine’s External Comparison Report enabled a robust evaluation of their MBA program’s performance. This process involved assessing internal learning outcomes and positioning these outcomes within a broader context by comparing them to peer institutions categorized under ACBSP Region 1. Such a comparison allowed Utica to identify areas of excellence and those needing enhancement.

At the MBA level, Utica outperformed the peer category for its four foundational MBA program goals. This information, shared on the university website, provides the public with evidence of successful student outcomes that bolsters the institution’s reputation and supports recruitment efforts.
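A peer comparison of this kind reduces to a per-goal difference between a program's score and the peer group's score. The sketch below assumes that simple form; the goal names and all numbers are hypothetical placeholders, not Utica's results or data from any Peregrine report.

```python
def compare_to_peers(program_scores, peer_scores):
    """Return program score minus peer aggregate score for each shared goal."""
    shared = program_scores.keys() & peer_scores.keys()
    return {goal: program_scores[goal] - peer_scores[goal] for goal in sorted(shared)}


# Hypothetical average scores per program goal, invented for illustration.
program_scores = {"Goal 1": 62.0, "Goal 2": 58.5, "Goal 3": 66.0, "Goal 4": 54.0}
peer_scores = {"Goal 1": 57.5, "Goal 2": 60.0, "Goal 3": 61.0, "Goal 4": 54.0}

differences = compare_to_peers(program_scores, peer_scores)
above_peers = [goal for goal, diff in differences.items() if diff > 0]
print(differences)
print(above_peers)
```

Goals with a positive difference point to areas of relative strength, while zero or negative differences mark areas to examine more closely.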

CamEd Business School

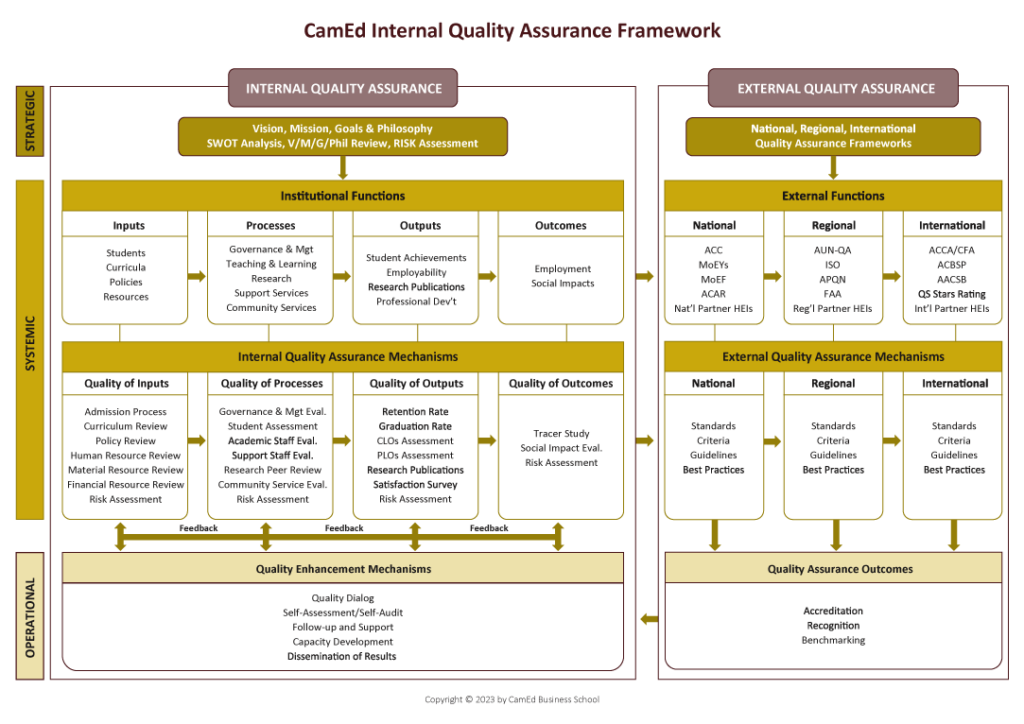

CamEd Business School represents a paradigm shift in higher education quality assurance. Focusing on the development of a robust Internal Quality Assurance (IQA) system, CamEd’s practices align with international standards, addressing the unique challenges of compulsory external quality assurance in Cambodia.

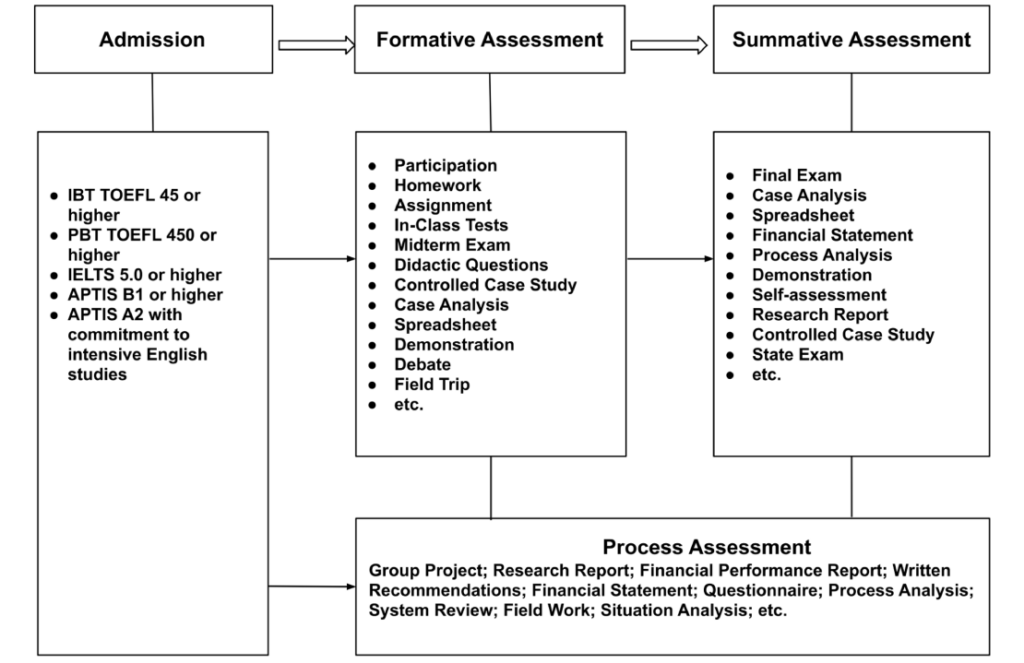

See Figure 4 for a visual of CamEd’s Internal Quality Assurance Framework.

This case study explores the school’s multi-faceted approach to quality assurance, curriculum development, faculty evaluation, and learning assessment, an approach that led to six certifications and accreditations between 2019 and 2022. Here, we examine two parts of that approach: curriculum development and learning outcomes assessment.

Curriculum Development Process

CamEd Business School’s Curriculum Development Process is a strategic and iterative cycle that ensures the quality and relevance of its educational programs. It begins with curriculum design, where the school establishes clear program objectives, learning outcomes, and course specifications. This phase incorporates input from external professionals and considers stakeholders’ needs, keeping the curriculum aligned with industry standards and student aspirations. In the curriculum implementation phase, the focus shifts to the practical delivery of the curriculum, including teaching and learning activities, extracurricular activities, research opportunities, and community services, all of which enrich the student experience and foster practical skills. The curriculum evaluation stage involves comprehensive reviews of curriculum, policies, resources, and various aspects of governance and staff performance, underpinning a culture of continuous improvement.

The results of this process serve as critical indicators of the framework’s effectiveness. Metrics such as retention, graduation, and employment rates provide quantitative evidence of success. Additionally, the frequency and caliber of research publications offer insight into the scholarly impact of the curriculum, while evaluations of social impact reflect the program’s contribution to society. These results are not the end point but a vital component of a continuous feedback loop, informing the next cycle of planning and action. This systematic approach ensures that CamEd’s curriculum is subject to regular scrutiny and refinement and aligned with the evolving needs of the global job market and societal expectations.

Learning Outcomes Assessment

CamEd Business School’s Learning Assessment Process delineates a structured approach to evaluating student progress and program effectiveness. Figure 5 shares the full picture of CamEd’s Learning Assessment Process. The process initiates at admission, setting academic benchmarks with English proficiency requirements. It then transitions into formative assessment, encompassing a broad spectrum of evaluative components such as participation, homework, in-class tests, and case studies, ensuring continuous student engagement and learning. Moving forward, summative assessment captures the culminating understanding and application of knowledge through final exams, financial statement spreadsheets, and self-assessment, amongst other methods.

At the core of this framework is the learning outcomes assessment, where faculty and staff review the alignment of course delivery with Course Learning Outcomes (CLOs) each semester. This step is complemented by the students’ self-evaluation of Program Learning Outcomes (PLOs), promoting self-reflection and responsibility for their learning. The results from these assessments are instrumental in determining if the program’s requirements are being met and where improvements can be made. This comprehensive assessment strategy ensures that CamEd measures student performance against established criteria and engages students actively in the assessment process, leading to a more enriched learning experience and ensuring that graduates are well-equipped to meet professional demands.

Harris-Stowe State University

Harris-Stowe State University in St. Louis, an esteemed HBCU, showcases the power of collaboration. Its partnerships with organizations and industry players ensure that students have the knowledge and experiences essential for success. The HSSU Anheuser-Busch School of Business casts a wide net to work with organizations that provide benchmarking data on student performance and with industry partners who offer experiences that bolster student success.

The first part of the HSSU process involves regular collection of direct and indirect measures of student achievement, followed by reflection on the data and recommendations for improvement from the Dean with faculty input. Peregrine’s knowledge-based assessment provides a nationally normed, direct measure of student learning and is administered every fall and spring by faculty who teach the capstone course. Results from the exam are analyzed and benchmarked against national and local programs to ensure continuous improvement in all curricula and programs.

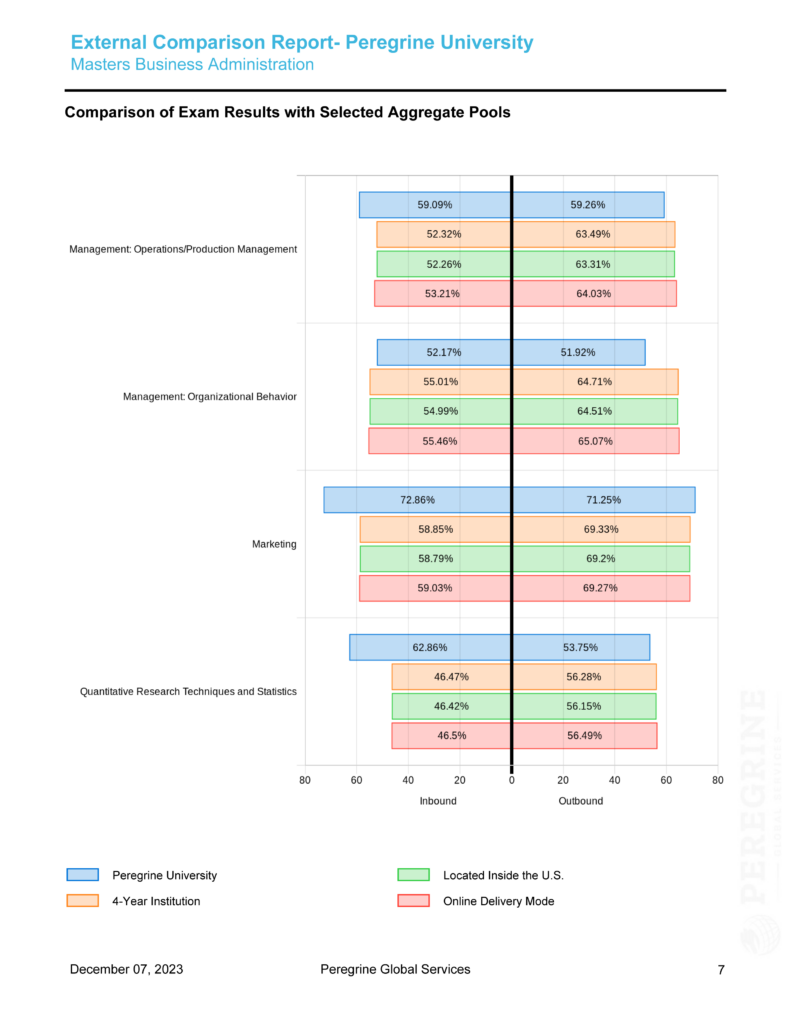

Figure 6 shows an example of a page from Peregrine’s External Comparison Report. In this example, the performance of MBA students is analyzed and compared against selected aggregate pools. In the category of Marketing, for instance, the report shows that students from Peregrine University outperformed their counterparts from other 4-year institutions and online delivery modes, as reflected in the Inbound and Outbound Exam scores. In this case, the background of incoming students could explain the higher scores.

Indirect measures gauge students’ perceptions, attitudes, or self-reported data about learning experiences or outcomes. While indirect measures do not directly assess student performance, they provide valuable insights into students’ experiences and perceptions. HSSU’s indirect measure comes from satisfaction surveys administered annually, semi-annually, or bi-annually. Data collected from the surveys are submitted to the Office of Institutional Assessment for analysis. The exit exam and the student surveys aid faculty deliberation on needed modifications and inform changes to curriculum and program offerings.

The second contributor to HSSU’s success is its collaboration with community and industry partners to expand opportunities for its business education students. MECCA, or the Minority Entrepreneurship Collaborative Center for Advancement, launched five new events and programs for students and the community in the past year and contributed nearly $93K to the area economy through small business consultant fees, curriculum development, and startup funding. By ensuring that the programs deliver quality education through rigorous, national benchmarking and by building partnerships with area businesses, the Anheuser-Busch School of Business at Harris-Stowe State University is helping students enter their professional careers with the knowledge and innovative experiences they need to succeed.

Point Loma Nazarene University

Point Loma Nazarene University, located on the oceanfront just outside San Diego, California, was a 2020 recipient of a “best practice” self-study designation across all standards of its ACBSP reaffirmation. At PLNU’s Fermanian School of Business (FSB), a big part of this success comes from a focus on building a quality culture. Quality culture in higher education refers to the collective and ongoing commitment of an institution to uphold and enhance the standards of academic excellence and operational effectiveness. This culture is characterized by a shared set of attitudes, values, goals, and practices that emphasize continuous improvement and excellence in all aspects of the educational experience.

First, PLNU’s faculty are committed to the FSB assessment process and highly involved in assessing student learning outcomes each academic year, using the following process:

- The faculty member teaching a course where a program learning outcome (PLO) is mastered collects and compiles artifacts in an Assessment Management System.

- All FSB full-time faculty members participate in a mandatory, two-day assessment workshop each year, observing student achievement across disciplines and offering their expertise on instructional methods to continuously improve.

- All faculty have the chance to provide feedback to the FSB Assessment Committee based on opportunities for improvement identified through the workshops.

- The FSB Assessment Committee meets at the beginning of the fall semester to review the assessment reports. Changes are made as necessary.

A key highlight of this case study is the persistent approach to continuous improvement, as exemplified by the evolution of the MBA PLO “Students will be able to evaluate the impact of business decisions in a global context.” This PLO was adopted in 2016, and in the first three years that it was assessed, the program could not show that students were consistently meeting or exceeding the target for this outcome. In 2018, minor curriculum changes were made, and case study questions were modified. In 2019, more curriculum changes were made, but students were still not demonstrating mastery of the PLO.

Undeterred, faculty persisted in their improvement efforts and redesigned their curriculum. They added a new international business case study as a tool for assessment. After three years of minor changes, the final redesign produced positive results: the average scores for each rubric criterion exceeded the target. The FSB Assessment Committee leveraged faculty input, which resulted in a stronger curriculum and better student learning outcomes. This was a lesson in the value of persistence: not lowering the standard or taking shortcuts but relying on faculty to provide solutions that enhance student learning. The success was a direct result of their commitment to a quality culture, which was only possible due to the buy-in from the faculty. This case study offers several strategies for gaining buy-in from others:

- Maintain openness regarding the assessment process by sharing data collection methods, analysis, and decision-making to foster trust and clarity.

- Keep faculty and staff informed about data insights and their impact on educational strategies through consistent updates using various communication channels.

- Encourage a participatory approach by including faculty and staff in decisions, enhancing their engagement and endorsement of outcomes.

- Equip educators with the necessary training to understand and apply assessment data, highlighting its relevance to their roles.

- Illustrate the effectiveness of data-driven choices with real-world instances where they’ve enhanced instructional approaches.

- Listen and respond constructively to faculty and staff feedback regarding the assessment process, which may involve process modifications, further explanations, or additional training.

- Facilitate the adoption of data-driven improvements by offering resources, professional development opportunities, or mentorship programs.

Building a culture of quality and continuous improvement is an iterative process that involves engaging and equipping faculty and administrators with the tools and understanding necessary to embrace data-driven decision-making (Assurance of Learning, 2024).

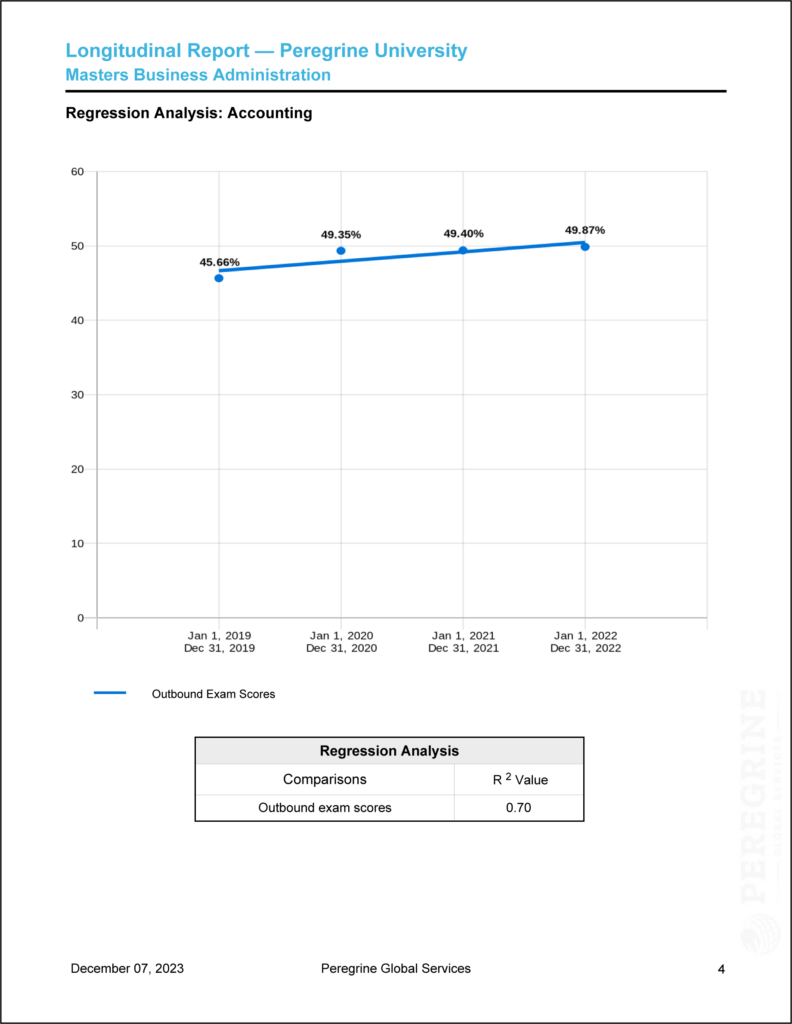

To help schools determine change over time, Peregrine provides a Longitudinal Report to its institutional partners. See Figure 7 for an example page from this report. The Longitudinal Report is a side-by-side comparison of the same exam over different exam periods. Up to four exam periods can be shown on the report. The report is most often used to evaluate academic change and to understand trends over time, as in the case of Point Loma Nazarene University.
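A side-by-side view of one exam across several periods can be sketched as follows. This is only an illustration of the idea under simple assumptions: the data layout, function names, topics, period labels, and scores are all hypothetical, and only the four-period limit comes from the report description above.

```python
def longitudinal_table(period_scores, max_periods=4):
    """Arrange per-topic scores side by side across exam periods.

    period_scores is a chronological list of (period_label, {topic: score}).
    Topics missing from a period are shown as None.
    """
    if len(period_scores) > max_periods:
        raise ValueError(f"at most {max_periods} exam periods can be compared")
    topics = sorted({topic for _, scores in period_scores for topic in scores})
    return {
        topic: [scores.get(topic) for _, scores in period_scores]
        for topic in topics
    }


def net_change(row):
    """Return last available score minus first, or None if fewer than two."""
    present = [value for value in row if value is not None]
    return present[-1] - present[0] if len(present) >= 2 else None


# Hypothetical average scores per exam period, invented for illustration.
periods = [
    ("Period 1", {"Global Business": 55.0, "Marketing": 60.0}),
    ("Period 2", {"Global Business": 58.0, "Marketing": 61.0}),
    ("Period 3", {"Global Business": 63.0, "Marketing": 59.0}),
]

table = longitudinal_table(periods)
changes = {topic: net_change(row) for topic, row in table.items()}
print(table)
print(changes)
```

Reading each row left to right shows the trend for a topic, and the net change gives a quick signal of whether curriculum revisions coincided with improvement.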

Conclusion

Through partnerships with Peregrine and other organizations, these institutions have embedded effective assessment processes into their programs, utilizing data to measure and enhance student achievement.

Utica University in New York has tailored its approach to prioritize key indicators of success. By implementing both inbound and outbound assessments, Utica has gauged student progress comprehensively. CamEd Business School is a testament to integrating a rigorous Internal Quality Assurance system, aligning with international standards, and addressing local educational challenges. Their structured approach to curriculum development and learning outcomes assessment has led to commendable accreditations and certifications.

Harris-Stowe State University exemplifies the power of collaboration through its HSSU Anheuser-Busch School of Business. Regularly collecting a blend of direct and indirect measures of student performance, the university has formed strategic partnerships that provide invaluable benchmarking data and practical experiences vital for student success. Finally, the focus on continuous improvement at Point Loma Nazarene University’s Fermanian School of Business is evident in the evolution of its MBA program’s learning outcomes, showcasing the positive impact of persistence and faculty-led solutions on student learning.

These case studies collectively highlight the importance of a systematic, data-informed approach to quality in higher education. They demonstrate the value of stakeholder involvement, transparency, communication, and the effective use of data to evaluate and significantly improve educational outcomes.