General education forms the foundation of a well-rounded academic experience, equipping students with essential skills and knowledge that prepare them for success in their professional and personal lives. These competencies—from written communication and quantitative reasoning to scientific and social ways of knowing—are integral to developing critical thinkers, effective communicators, and lifelong learners.

Effectively assessing these outcomes helps ensure that academic programs meet their intended goals. Beyond measuring what students know and can do, assessment is a powerful tool for continuous improvement. By collecting and analyzing data on student performance, institutions can identify strengths, address gaps, and make informed decisions to improve curriculum and the student experience. However, finding tools that balance customization, accessibility, and actionable insights can be a challenge. This article explores how Ivy Tech Community College adopted a new assessment tool to measure its general education competencies efficiently and effectively.

The Challenges of General Education Assessment

Assessing general education outcomes is a complex and resource-intensive task for higher education institutions. Central to this challenge is aligning assessments with institutional goals and accreditation standards. Accrediting bodies, such as the Higher Learning Commission (HLC) and other institutional or specialized accreditors, often require clear evidence of how general education competencies are being met and improved over time. However, many institutions lack streamlined tools to assess these outcomes efficiently.

Additionally, resource constraints frequently hinder effective assessment. According to a 2018 report by the National Institute for Learning Outcomes Assessment (NILOA), institutions often struggle to allocate sufficient time, funding, and personnel to sustain robust assessment practices. Faculty are commonly tasked with designing, administering, and scoring assessments, which can detract from teaching and research responsibilities (NILOA, 2018).

Ivy Tech’s Struggles with Outdated Tools

Dr. Kristina Collins, Assistant Vice President for Assessment and Accreditation at Ivy Tech Community College, described the struggles Ivy Tech faced in its early approach to assessment. The institution once used the Collegiate Assessment of Academic Proficiency (CAAP), a proctored, paper-based exam widely recognized for its standardized approach to measuring general education outcomes. However, as the needs of students and institutions evolved, several logistical and operational aspects of CAAP became problematic.

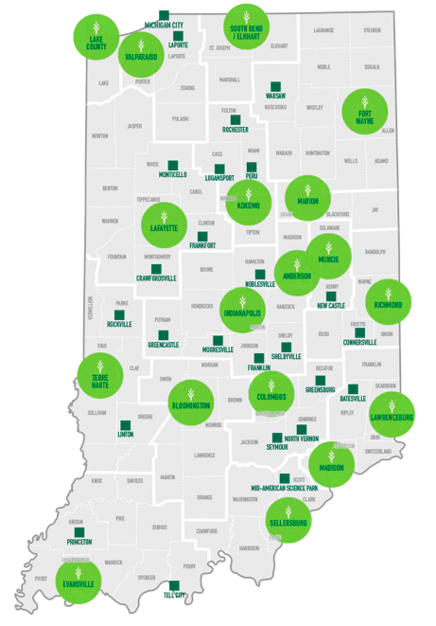

Students, including those enrolled in online programs, were required to visit campus testing centers to complete the exam, which presented access challenges for certain populations. Non-traditional students, who often balance work, family, and education, as well as geographically dispersed learners, faced additional hurdles due to this requirement. Given Ivy Tech Community College’s scale as the largest singly accredited community college system in the United States (19 major campuses, 40 statewide locations, and over 75 programs), ensuring equitable access for such a diverse student body required innovative approaches.

Access was not the only issue. CAAP data were processed off-site, and results were delivered on CD. By the time results arrived, they were often too late to inform decision-making or curriculum adjustments. Administrative burdens were also significant: testing centers were responsible for collecting, coding, and sending paper forms for analysis.

When CAAP was discontinued in 2018, Ivy Tech sought to address these issues by developing homegrown assessment tools. Faculty were tasked with designing assessments for specific competencies, such as written communication and quantitative reasoning, and creating rubrics to measure student performance. While this approach provided some level of customization, it also introduced new obstacles:

- The assessment process placed a heavy burden on faculty, who had to score rubrics and analyze results by hand. This work took time away from their primary teaching and research responsibilities.

- Although general education assessment was mandated, participation rates remained low. Faculty found it difficult to motivate students to take the assessments seriously, which resulted in incomplete or unreliable data.

These limitations highlighted the need for an accessible, online solution like Peregrine’s General Education Assessment.

Meeting Ivy Tech’s Needs: Assessing Indiana’s Six Core Competencies

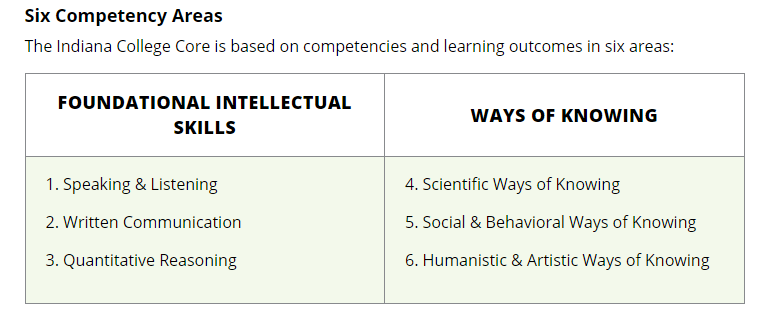

Customization was a primary need for Ivy Tech to adequately assess the six core competencies mandated by Indiana’s College Core. These competencies encompass foundational intellectual skills, including written communication, quantitative reasoning, speaking and listening, and humanistic, scientific, and social ways of knowing. Together, they form the backbone of academic and career readiness for students.

Peregrine’s customizable General Education Assessment addressed this need by offering 31 general education topics, allowing Ivy Tech to consolidate all six competencies into a single, streamlined tool. This approach significantly reduced administrative burdens while providing a comprehensive view of student performance across all required areas. Dr. Kristina Collins emphasized the tool’s adaptability, stating, “This is customized to your program, administered online without the requirement of a proctor… and this can be integrated directly into your learning management system. You can also choose to have a grade book posting automatically once a student completes the assessment.”

The online format of Peregrine’s assessment further transformed Ivy Tech’s approach to evaluating outcomes. Fully online administration removed many of the logistical challenges of traditional proctored exams, such as requiring students to visit campus testing centers, which improved accessibility for students while reducing administrative overhead. Proctoring is not required, but institutions that want it can still use external proctoring services.

This flexibility was particularly impactful for Ivy Tech’s diverse and geographically dispersed student population. The ability to customize and administer assessments online improved participation rates and supported a more sustainable assessment process. By integrating directly into learning management systems and offering features like automated gradebook posting, Peregrine’s solution provided an efficient way for Ivy Tech to meet its assessment goals without compromising security or data integrity.

Finally, implementation was fast despite the institution’s size and complexity. Ivy Tech began discussions with Peregrine in October 2022 and launched a pilot in January 2023, a short timeline for a system of its size. Dr. Collins said, “In higher education, I’ve been around since 1998… things don’t tend to move very quickly. But look at this time frame, we went from starting discussions in October to a January implementation of a pilot… This agility was a testament to Peregrine.”

Leveraging Data for Continuous Improvement

Assessment exists to generate data for quality assurance and continuous improvement. Peregrine’s assessment offers a suite of 16 reports designed to address different institutional needs, from granular student-level analysis to high-level program evaluations. Key reports include:

Individual-Level Reports: These reports provide detailed insights into each student’s performance, including topic and subject-level scores, percentile rankings, and time spent on the assessment. Institutions can even track how students navigate the exam, flagging potential issues with engagement or effort.

Programmatic Reports: These reports provide a higher-level view of program outcomes while allowing institutions to dig into the data to reveal insights. Some of the programmatic reports offered include:

- External Analysis/Benchmarking Data: Compare your institution’s performance against peer institutions to identify strengths and areas for improvement.

- Internal Analysis: Dive deeper into specific topics or subject areas to uncover trends and highlight opportunities for targeted enhancements.

- Longitudinal Analysis Reports: By comparing up to four datasets over time, this report helps institutions evaluate the impact of curriculum changes and measure improvements in student learning outcomes.

- Gap Analysis Reports: A comprehensive tool for analyzing trends, this report combines subject-level data, comparative analysis, and distractor analysis (which incorrect answer choices students select) to highlight knowledge gaps and suggest targeted interventions.

For Ivy Tech, these tools have been transformative. The ability to access real-time data has allowed the institution to make informed decisions quickly. By disaggregating data by campus, delivery mode (e.g., online vs. face-to-face), or even specific programs, administrators can pinpoint exactly where students excel and where additional support might be needed. For example:

- In quantitative reasoning, Ivy Tech identified specific subtopics where students were struggling and introduced targeted projects and discussion posts to address these gaps.

- Real-time reporting also helped Ivy Tech monitor response rates during pilot phases, letting them implement communication strategies that dramatically increased student participation.

- The ability to filter out unreliable data, such as assessments completed in less than 10 minutes, helped ensure that the insights derived from Peregrine’s reports were accurate and actionable.
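To make the data-screening and disaggregation steps above concrete, here is a minimal Python sketch: it drops attempts completed in under 10 minutes, then averages the remaining scores by campus or by delivery mode. It assumes results are available as simple records; the `Result` fields, the function names, and the sample values are hypothetical illustrations, not Peregrine’s export format, API, or real Ivy Tech data.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

# Threshold taken from the example in the text; adjust to local policy.
MIN_MINUTES = 10


@dataclass
class Result:
    # Hypothetical fields; an actual results export may use different names.
    campus: str
    delivery_mode: str  # e.g., "online" or "face-to-face"
    minutes_spent: float
    score: float  # percent correct


def filter_reliable(results, min_minutes=MIN_MINUTES):
    """Keep only attempts that took at least min_minutes to complete."""
    return [r for r in results if r.minutes_spent >= min_minutes]


def mean_score_by(results, key):
    """Return the average score for each group produced by key(result)."""
    groups = defaultdict(list)
    for r in results:
        groups[key(r)].append(r.score)
    return {group: mean(scores) for group, scores in groups.items()}


if __name__ == "__main__":
    # Illustrative values only, not real assessment results.
    results = [
        Result("Campus A", "online", 42.0, 78.0),
        Result("Campus A", "face-to-face", 7.5, 35.0),  # under 10 minutes: excluded
        Result("Campus B", "online", 31.0, 64.0),
        Result("Campus B", "face-to-face", 55.0, 82.0),
    ]
    reliable = filter_reliable(results)
    print(mean_score_by(reliable, key=lambda r: r.campus))
    print(mean_score_by(reliable, key=lambda r: r.delivery_mode))
```

Keeping the time threshold as a parameter makes it easy to check how sensitive group averages are to the cutoff before adopting it as a reporting rule.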

Dr. Collins summed it up by saying, “We don’t have to wait for the CD to come in the mail… The ability to break down our different groups… and see what campuses or programs are excelling versus those that might need more support has been critical.”

Peregrine’s comparative analysis tools add an extra layer of value for institutions. Accreditation bodies such as the Higher Learning Commission (HLC) often require schools to demonstrate how their performance compares to peer institutions. Peregrine’s reports facilitate these comparisons by providing aggregate data from institutions with similar demographics, delivery modes, or accreditation bodies. “A lot of our accreditation… wants to have some comparative analysis. How are you doing in comparison to other institutions? And the Peregrine reporting allows us to do that.”

General Education Assessment with Peregrine

Sustainable and scalable assessment of general education outcomes is essential for ensuring undergraduate programs are developing critical thinkers, skilled communicators, and lifelong learners. Institutions like Ivy Tech have demonstrated how leveraging online, customizable tools like Peregrine’s General Education Assessment can address challenges such as limited accessibility, resource constraints, and the need for actionable data.

Ready to transform your institution’s assessment process? Discover how Peregrine’s General Education Assessment can help you enhance your programs, empower your faculty, and improve student outcomes. Contact us today to learn more and take the first step toward meaningful, data-driven improvement.

Reference:

National Institute for Learning Outcomes Assessment (NILOA). (2018). Assessment that matters: Trending toward practices that document authentic student learning. Retrieved from https://www.learningoutcomesassessment.org/wp-content/uploads/2019/02/2018SurveyReport.pdf